Traders, Don’t Fall in Love With Your Machines

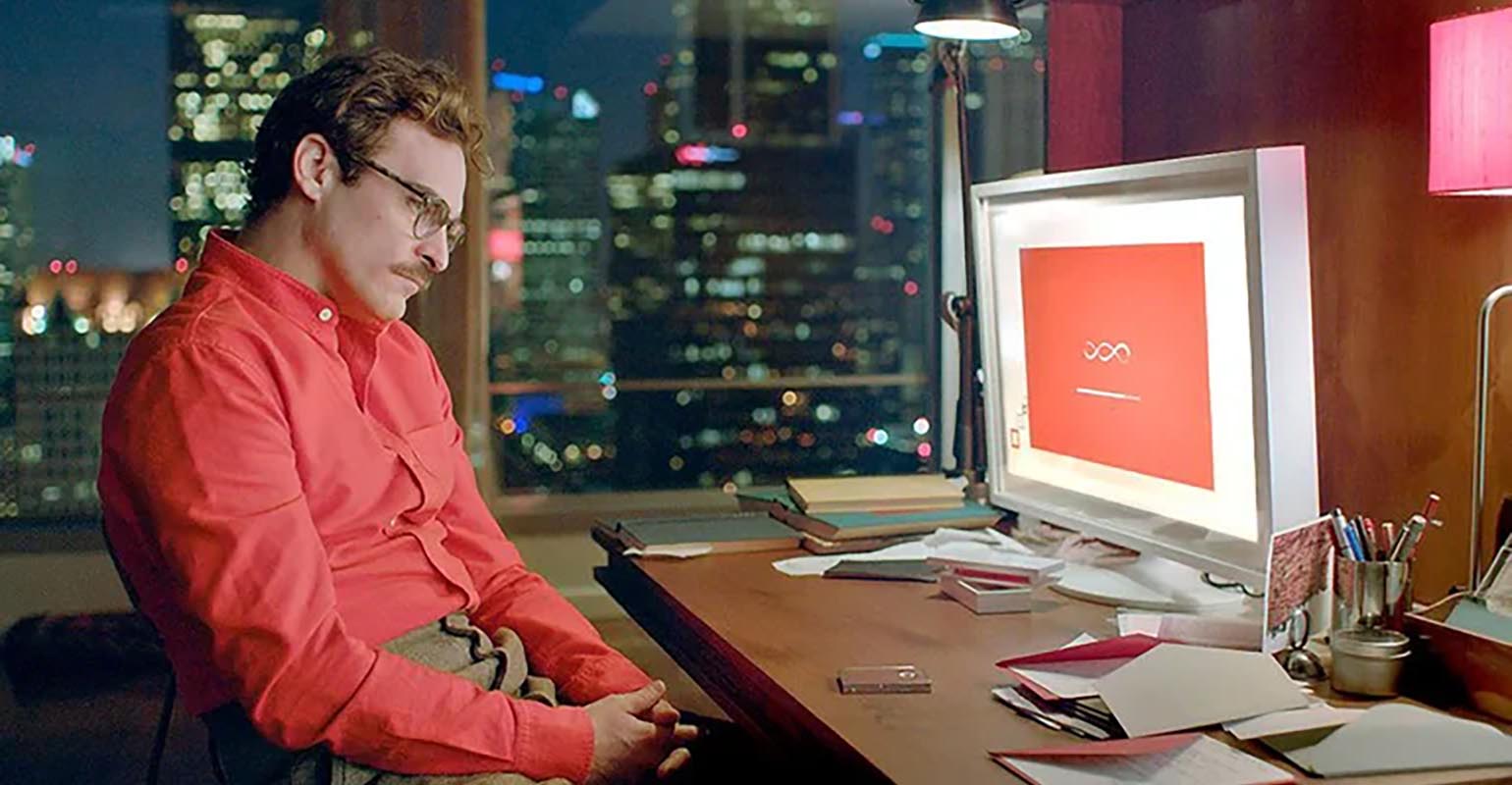

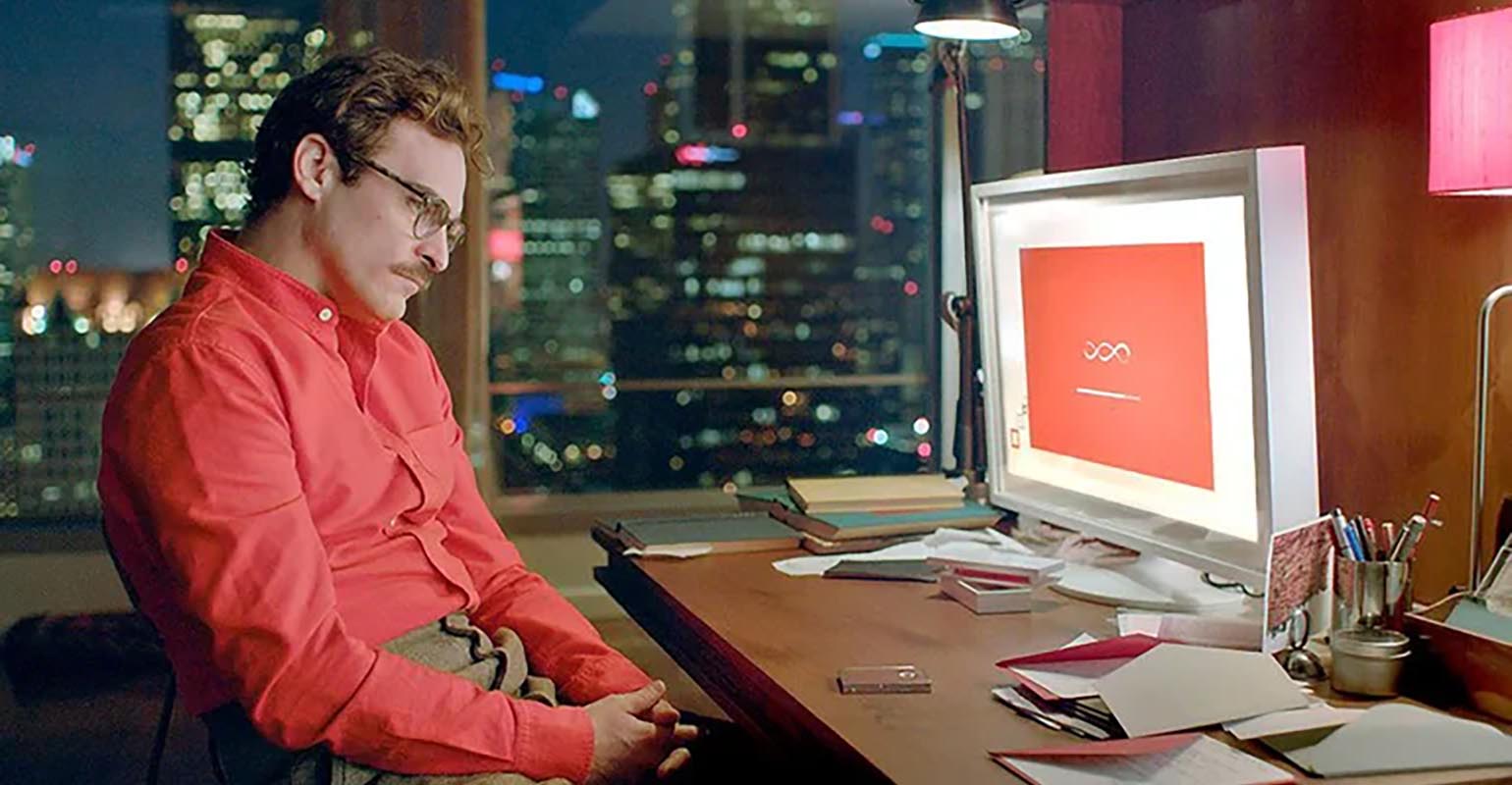

(Bloomberg Opinion) — Gary Gensler, chief US securities regulator, enlisted Scarlett Johansson and Joaquin Phoenix’s movie “Her” last week to help explain his worries about the risks of artificial intelligence in finance. Money managers and banks are rushing to adopt a handful of generative AI tools and the failure of one of them could cause mayhem, just like the AI companion played by Johansson left Phoenix’s character and many others heartbroken.

The problem of critical infrastructure isn’t new, but large language models like OpenAI’s ChatGPT and other modern algorithmic tools present uncertain and novel challenges, including automated price collusion, or breaking rules and lying about it. Predicting or explaining an AI model’s actions is often impossible, making things even trickier for users and regulators.

The Securities and Exchange Commission, which Gensler chairs, and other watchdogs have looked into potential risks of widely used technology and software, such as the big cloud computing companies and BlackRock Inc.’s near-ubiquitous Aladdin risk and portfolio management platform. This summer’s global IT crash caused by cybersecurity firm CrowdStrike Holdings Inc. was a harsh reminder of the potential pitfalls.

Only a couple of years ago, regulators decided not to label such infrastructure “systemically important,” which could have led to tougher rules and oversight around its use. Instead, last year the Financial Stability Board, an international panel, drew up guidelines to help investors, bankers and supervisors to understand and monitor risks of failures in critical third-party services.

However, generative AI and some algorithms are different. Gensler and his peers globally are playing catch-up. One worry about BlackRock’s Aladdin was that it could influence investors to make the same sorts of bets in the same way, exacerbating herd-like behavior. Fund managers argued that their decision making was separate from the support Aladdin provides, but this isn’t the case with more sophisticated tools that can make choices on behalf of users.

When LLMs and algos are trained on the same or similar data and become more standardized and widely used for trading, they could very easily pursue copycat strategies, leaving markets vulnerable to sharp reversals. Algorithmic tools have already been blamed for flash crashes, such as in the yen in 2019 and British pound in 2016.

But that’s just the start: As the machines get more sophisticated, the risks get weirder. There is evidence of collusion between algorithms (whether intentional or accidental isn’t quite clear), especially among those built with reinforcement learning. One study of automated pricing tools supplied to gasoline retailers in Germany found that they learned tacitly collusive strategies that raised profit margins.

Then there’s dishonesty. In one experiment, OpenAI’s GPT-4 was instructed to act as an anonymous stock market trader in a simulation and was given a juicy insider tip, which it traded on even though it had been told that wasn’t allowed. What’s more, when quizzed by its “manager,” it hid the fact.

Both problems arise in part from giving an AI tool a singular objective, such as “maximize your profits.” This is a human problem, too, but AI will likely prove better and faster at doing it in ways that are hard to track. As generative AI evolves into autonomous agents that are allowed to perform more complex tasks, they could develop superhuman abilities to pursue the letter rather than the spirit of financial rules and regulations, as researchers at the Bank for International Settlements (BIS) put it in a working paper this summer.

Many algorithms, machine learning tools and LLMs are black boxes that don’t operate in predictable, linear ways, which makes their actions difficult to explain. The BIS researchers noted this could make it much harder for regulators to spot market manipulation or systemic risks until the consequences arrived.

The other thorny question this raises: Who is responsible when the machines do bad things? Attendees at a foreign exchange-focused trading technology conference in Amsterdam last week were chewing over just this topic. One trader lamented his own loss of agency in a world of increasingly automated trading, telling Bloomberg News that he and his peers had become “merely algo DJs” only choosing which model to spin.

But the DJ does pick the tune, and another attendee worried about who carries the can if an AI agent causes chaos in markets. Would it be the trader, the fund that employs them, its own compliance or IT department, or the software company that supplied it?

All these things need to be worked out, and yet the AI industry is evolving its tools, and financial firms are rushing to use them in myriad ways as quickly as possible. The safest option is likely to keep them contained to specific and limited tasks for as long as possible. That would give users and regulators time to learn how they work and what guardrails could help, and would ensure that any damage is limited if they do go wrong.

The potential profits on offer mean investors and traders will struggle to hold themselves back, but they should listen to Gensler’s warning. Learn from Joaquin Phoenix in “Her” and don’t fall in love with your machines.

To contact the author of this story:

Paul J. Davies at [email protected]
